Will Leeney

AI Research Engineer

Building an Agentic Researcher

StackOne builds integrations, which puts the company at a strategic point in the AI agent space. Agents need real-world connections to be useful, such as CRM systems, email platforms, and calendar applications, so it matters to us which integrations developers are actually building. I built an agentic researcher that continuously monitors the web for AI agent implementations.

The Problem: Why Building an Agentic Researcher Is Hard

The AI agent ecosystem includes many frameworks, among them LangChain, LangGraph, CrewAI, and Claude tools, and new ones appear regularly. Developers connect these tools to services like Salesforce, Gmail, Slack, and HubSpot, but the evidence of what they build is scattered across Reddit, GitHub, blog articles, and Twitter. The signal-to-noise ratio across these sources is very low.

Day 1: Building the Agentic Researcher Pipeline

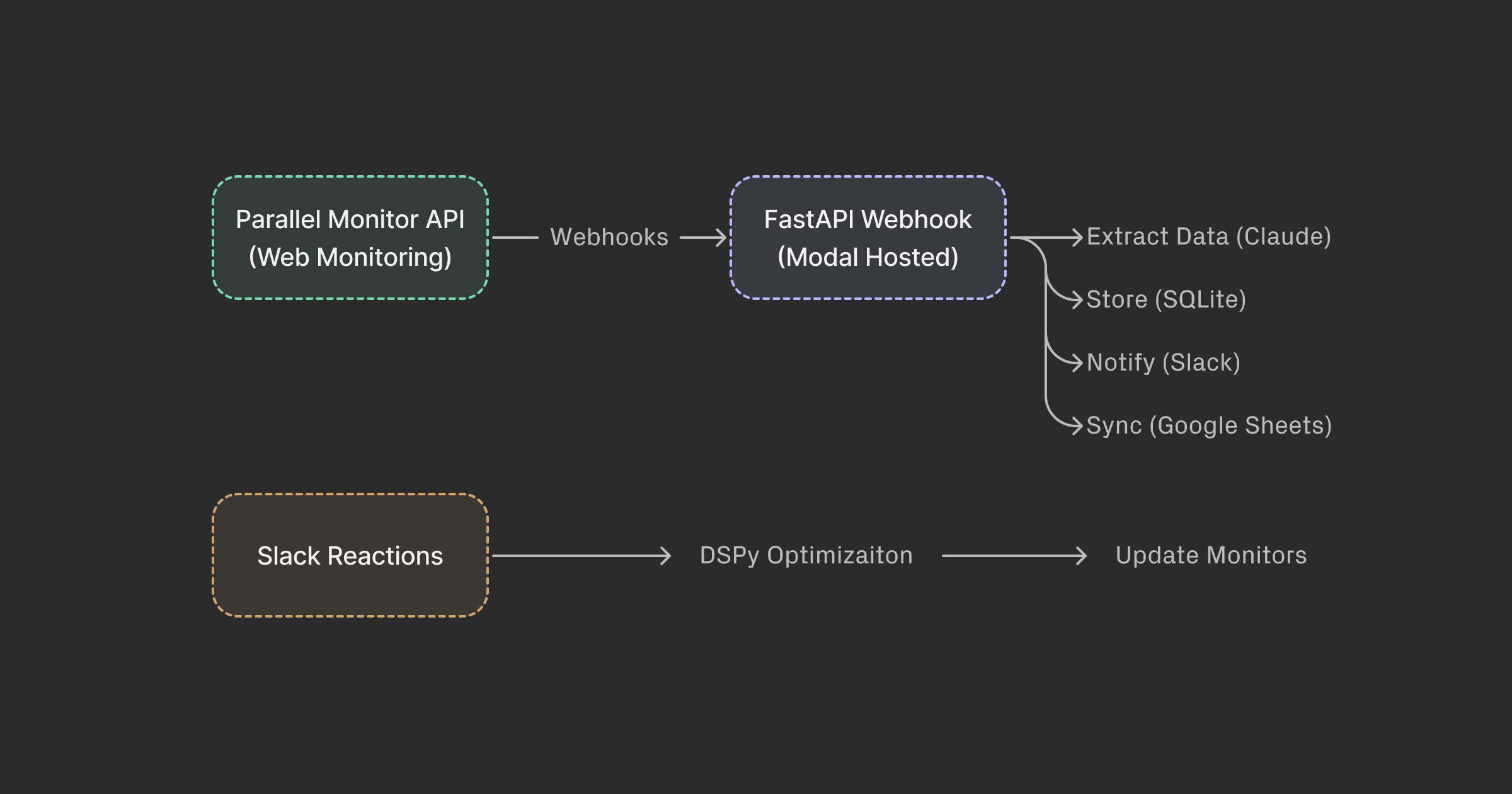

The initial architecture followed this sequence: Parallel Monitor API -> Webhook Handler -> Claude Extraction -> Slack notification.

Key components:

- Parallel monitors the web continuously via its Monitor API, delivering webhooks upon finding relevant content

- Modal hosts a FastAPI webhook handler with persistent storage

- Claude extracts structured information from messy web content

- Slack distributes extracted use case details to team members
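As a rough sketch of how these components fit together, the function below processes one webhook delivery with the extraction and notification steps passed in as callables. It is not the production handler: the `UseCase` fields and the payload keys (`results`, `url`, `content`) are hypothetical and are not taken from Parallel's actual webhook schema. In a deployment like the one described, a FastAPI route would call something along these lines, with `extract` wrapping a Claude request and `notify` posting to Slack.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UseCase:
    """Structured record for one piece of web content (field names are assumed)."""
    url: str
    framework: str
    integration: str
    summary: str


def handle_webhook(
    payload: dict,
    extract: Callable[[str, str], UseCase],
    notify: Callable[[UseCase], None],
) -> list[UseCase]:
    """Run extraction, then notification, for every result in one webhook delivery."""
    use_cases = []
    # "results", "url" and "content" are hypothetical keys, not a documented schema.
    for result in payload.get("results", []):
        use_case = extract(result["url"], result["content"])
        notify(use_case)
        use_cases.append(use_case)
    return use_cases
```

Injecting the two steps keeps the handler testable without network access, since both can be replaced with stubs.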

Initial monitors tracked broad queries:

- LangChain agent integration

- AI agent with Salesforce

- CrewAI Gmail agent

- AI agent email automation

Day 1.1: Collecting Feedback for the Agentic Researcher

The simple pipeline functioned but generated excessive irrelevant results. The system lacked evaluation mechanisms, making it impossible to measure effectiveness. The solution involved gathering team feedback through low-friction emoji reactions on Slack messages:

- Fire emoji = 5 points

- Thumbs up = 1 point

- Thumbs down = -1 point
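The scoring scheme above can be expressed as a small lookup table. This is a minimal sketch: the point values come from the list, while the reaction names (`fire`, `+1`, `thumbsup`, `-1`, `thumbsdown`) are assumed to be the emoji names Slack reports, and `feedback_score` is a hypothetical helper.

```python
from collections import Counter

# Point values from the post; the keys are assumed Slack emoji names.
REACTION_POINTS = {
    "fire": 5,
    "+1": 1,
    "thumbsup": 1,
    "-1": -1,
    "thumbsdown": -1,
}


def feedback_score(reactions: list[str]) -> int:
    """Sum the points for a list of reaction names, ignoring unscored emoji."""
    counts = Counter(reactions)
    return sum(REACTION_POINTS.get(name, 0) * n for name, n in counts.items())
```

For example, `feedback_score(["fire", "+1", "-1"])` evaluates to 5, and reactions outside the table contribute nothing.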

The handler subscribes to reaction events through the Slack Events API, reads the timestamp of the message that received the reaction, matches it to the use case stored in SQLite, and records the feedback signal. This turned the team from passive consumers into active contributors to the system's improvement.
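A minimal sketch of that matching step, using only the standard-library `sqlite3` module. The table and column names are hypothetical, and the event dictionary follows the general shape of a Slack `reaction_added` event, where the message timestamp sits under `item.ts`.

```python
import sqlite3


def init_db(conn: sqlite3.Connection) -> None:
    """Create the two tables used by this sketch (schema is assumed)."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS use_cases (
            id INTEGER PRIMARY KEY,
            slack_ts TEXT UNIQUE,   -- timestamp of the Slack message announcing it
            summary TEXT
        );
        CREATE TABLE IF NOT EXISTS feedback (
            use_case_id INTEGER REFERENCES use_cases(id),
            user_id TEXT,
            reaction TEXT
        );
        """
    )


def record_reaction(conn: sqlite3.Connection, event: dict) -> bool:
    """Store a reaction_added event; return False if it matches no use case."""
    if event.get("type") != "reaction_added":
        return False
    ts = event.get("item", {}).get("ts")
    row = conn.execute(
        "SELECT id FROM use_cases WHERE slack_ts = ?", (ts,)
    ).fetchone()
    if row is None:
        return False  # reaction on a message that is not a tracked use case
    conn.execute(
        "INSERT INTO feedback (use_case_id, user_id, reaction) VALUES (?, ?, ?)",
        (row[0], event.get("user"), event.get("reaction")),
    )
    conn.commit()
    return True
```

Storing the raw reaction name rather than a point value means the scoring scheme can change later without rewriting history.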

Day 1.2: Making the Agent Learn

DSPy provided the framework for prompt optimization based on collected feedback. The challenge: the Parallel Monitor API operates asynchronously via webhooks, preventing real-time query testing. The solution used embedding similarity as a proxy:

- Sentence transformers embed both use-cases and queries

- Cosine similarity ranks use-case relevance to queries

- Reaction data combined with similarity scores generates reward signals

- DSPy optimizes monitor queries to maximize similarity with positively-rated content
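To make the proxy concrete, here is a sketch of one way to turn similarity and reaction scores into a reward for a candidate query. The post does not give the exact formula, so the score-weighted average below is an assumption, and `query_reward` is a hypothetical name. Embeddings are plain lists of floats here; in the setup described they would come from a sentence-transformer model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def query_reward(
    query_vec: list[float],
    rated_use_cases: list[tuple[list[float], float]],
) -> float:
    """Average of (similarity * feedback score) over all rated use cases.

    Assumed formula: a query scores well when it sits close to use cases
    with positive feedback and far from those with negative feedback.
    """
    if not rated_use_cases:
        return 0.0
    total = sum(
        cosine_similarity(query_vec, vec) * score
        for vec, score in rated_use_cases
    )
    return total / len(rated_use_cases)
```

An optimizer can then compare candidate queries by this reward without waiting for the monitor to return new results.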

A weekly scheduled job reviews accumulated reactions, optimizes queries, and updates monitors every Monday morning.

Day 2: Consolidating the Agentic Researcher Monitors

After one week, feedback was spread too thinly across many narrow monitors for the optimizer to learn much from it. The consolidation strategy reduced the monitors to two categories:

- Real-time social (daily updates): Reddit, Twitter, GitHub, Stack Overflow

- Evergreen content (weekly updates): Blogs, tutorials, documentation

The broader monitors surfaced a wider range of use cases, which drew more reactions and gave the optimizer more data to work with. Additional improvements included:

- Slack commands to trigger re-optimization manually

- Visibility into the current monitor queries for team oversight

- Google Sheets synchronization for simpler use-case analysis

- Automatic filtering of negatively rated content
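The filtering step could be as simple as the sketch below. It assumes each use case carries a net `score` computed from its reactions and treats "negatively rated" as a net score below zero; both the field name and the threshold are assumptions.

```python
def visible_use_cases(use_cases: list[dict], min_score: int = 0) -> list[dict]:
    """Keep use cases whose net feedback score is at least min_score.

    Items with no reactions yet default to a score of 0 and stay visible.
    """
    return [uc for uc in use_cases if uc.get("score", 0) >= min_score]
```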

Final Architecture

The complete system flows as follows:

Parallel Monitor API -> Webhooks -> FastAPI Webhook Handler (Modal) -> Claude Extraction + SQLite Storage -> Slack Notifications + Google Sheets Sync

Feedback Loop: Slack Reactions -> DSPy Optimization -> Monitor Updates

Key Takeaway

It’s really easy to build agentic systems. The real complexity lies in data collection and evaluation. This implementation kept friction low by collecting feedback in a tool the team already used (Slack), and it showed that a team motivated to improve the system will participate, which in turn makes the system more effective.